While chatting with someone on Facebook, I felt compelled to write the following response. I’ve updated it slightly to add some further reflection on the topic.

A Tired Argument

What a tired argument:

Without AI Literacy, Students Will Be ‘Unprepared for the Future,’ Educators Say (source).

Isn’t this the same argument that always gets used? Why not come up with something more accurate? Let’s see:

- Cynical: Our children need to know this so schools can spend money on Big Tech stuff that has no research to support its use in K-16.

- Hopeful: Our children need to learn prompt engineering so they can wield AI interfaces that will control even more powerful technologies.

- Job-Oriented: Our children need to learn how to use AI as soon as possible so businesses can leverage teenagers to do AI-powered work in minimum-wage jobs (hmm…a touch of cynicism in this one, too).

My problem with AI for children? It helps them short-circuit the productive struggle they need to engage in. Having been an edtech (maybe you prefer “Ed Tech” or “ed tech”) advocate for years, I have to ask myself, “Does educational technology really serve the interests of children engaged in learning, or those of companies selling expensive products to schools for profit?”

As a classroom teacher and educator, I believe misleading people to sell a product “just for profit” to make a buck is wrong. Of course, there’s a fine line between misleading with false advertising (“AI can personalize learning and every child needs a ChatGPT account”) and helping people understand how AI can make their work easier and boost productivity (“AI saves me time and effort that I can put to use building relationships with colleagues and students”).

Follow the Money Trail

When it comes to AI for students, it’s a “follow the money” situation: more users, more money. Worse, AI short-circuits the necessary process of learning.

That said, teachers and staff can definitely use AI to alleviate their workload and rely on AI as a thought partner to enhance thinking and efficiency.

Meta-Tool Maker

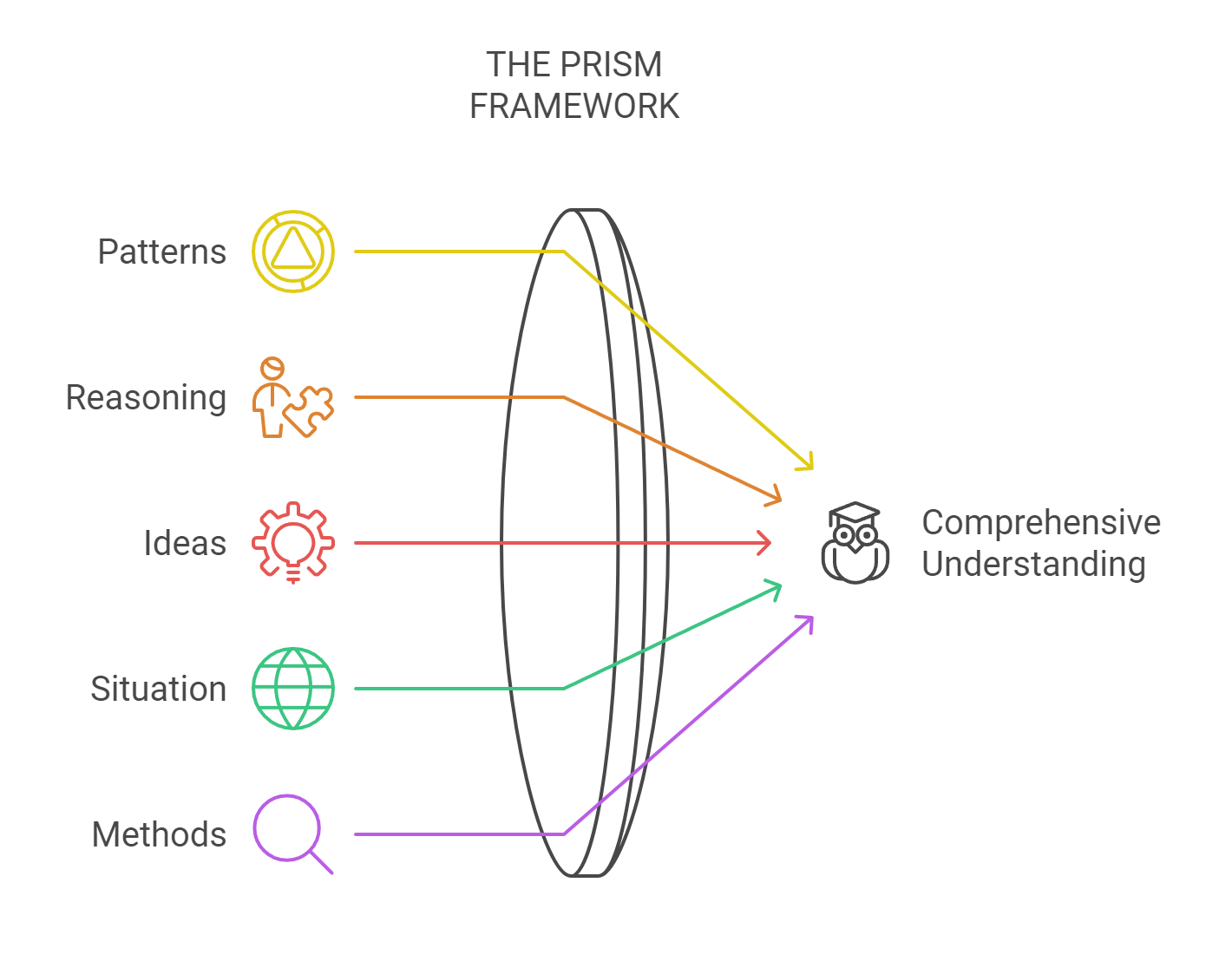

I have found AI quite helpful. It assists me in scaffolding my thinking. I have a blog series (pending publication) that explores the PRISM Framework, which is based on the SOLO Taxonomy. It has been helpful when examining new ideas. You can see a blog entry about PRISM for middle school science here.

| PRISM Element | Core Question | Deep Questions |
|---|---|---|
| P - Patterns | What patterns do you see? | • What big ideas keep showing up? • How do these patterns work in different places? • What general patterns help us understand better? |
| R - Reasoning | How do things fit together? | • How do pieces connect to tell the whole story? • What makes sense when you look at everything? • How do different parts work together? |
| I - Ideas | What different ideas can we mix? | • What happens when we try new ways of thinking? • What other ideas should we explore? • How do different viewpoints help us understand? |
| S - Situation | What’s the bigger picture? | • How does this connect to other things? • What else affects what’s happening? • What’s important beyond what we first see? |
| M - Methods | How can we check our answers? | • What other ways could explain this? • How do we know which answer is right? • What different ways can we solve this? |

What’s more, I have taken existing models like FLOATER and Orwell’s Test and used AI to apply them when analyzing news and assertions. It’s helpful, useful, and faster than applying them myself, especially as a custom GPT. By doing my own analysis of the generated content, I get a clearer picture of the ideas and of my own thinking. Quite helpful.

Using AI to generate lessons, activities, and presentations, and to format diagrams, has also been a time-saver. It lets me build new conceptual tools faster…a meta-tool for making more effective ones.

For education, the question isn’t whether we should introduce AI. Rather, it is when and how to do so without interfering with the productive struggle that learning requires.

Right now, Big Tech wants AI everywhere so it can recoup its investment by gaining access to K-12 funding. I do not see the value of letting these companies, or similar edtech vendors, into schools with products that are unproven and less effective than instructional strategies with high effect sizes.

All that aside, I respect the work and advocacy of critics of AI in education. The problem with AI is not only the hype, but also its environmental effects and the human exploitation behind it, which often go unmentioned. Of course, I could be totally wrong. These are my opinions and not really supported by research. Time will tell. In the meantime, should we be experimenting with unproven edtech when proven instructional strategies are available?