3-2-1: The Unknown Toll of AI

This morning, while reading this response on Threads on my new, consolidated account, I found myself wondering, “How many resources do Perplexity, Claude, or ChatGPT consume when I ask a simple question like ‘What’s the scientific consensus on XYZ?’” And should I even be asking that question and using AI to research it? Then I pictured a host of similarly inane questions being asked at “Intro to AI” workshops around the globe, not to mention more serious questions that demand even more processing.

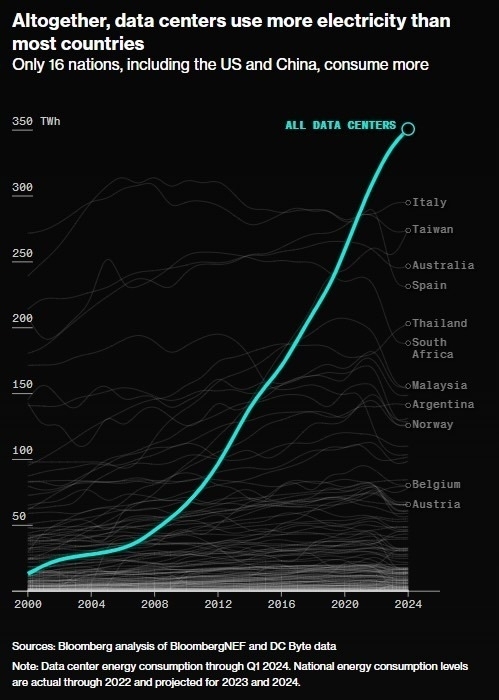

Image Source: leadlagreport via Threads

Check out the accompanying Bloomberg article; it has some striking photos and an important message.

Over at The Lever, they ask this probing question:

As artificial intelligence guzzles water supplies and jacks up consumers’ electricity rates, why isn’t anyone tracking the resources being consumed?

Perplexity.ai suggests:

- Generating a single image using a powerful AI model like Stable Diffusion XL can consume as much energy as fully charging a smartphone.

- Text generation with AI models consumes less energy than image generation, but still more than traditional text-based services. By one estimate, generating text with AI uses about 0.047 kWh per 1,000 inferences, roughly equivalent to 3.5 minutes of Netflix streaming.

- The energy used to run AI services day to day (inference) can far exceed the energy used to train the models. Popular models like ChatGPT can surpass their training emissions after just a couple of weeks of regular use.

- At scale, adding AI-generated answers to every Google search could consume as much electricity as the entire country of Ireland.

Those are sobering facts. A few more questions seem worth asking:

- How can we balance the benefits of AI technology with its significant energy consumption and carbon footprint? Should we?

- What responsibility do AI companies have for ensuring their systems are environmentally sustainable? Should they bear the full cost, or should governments subsidize it (perhaps they already do)?

- Should there be regulations or standards in place to limit the environmental impact of AI development and deployment?

It’s all worth considering.

3-2-1

Here’s a 3-2-1 summary of The Lever’s article on the energy consumption of AI-powered search:

3 Quotes:

- “Each time you search for something like ‘how many rocks should I eat’ and Google’s AI ‘snapshot’ tells you ‘at least one small rock per day,’ you’re consuming approximately three watt-hours of electricity”[1]

- “That’s ten times the power consumption of a traditional Google search, and roughly equivalent to the amount of power used when talking for an hour on a home phone”[1]

- “Collectively, de Vries calculates that adding AI-generated answers to all Google searches could easily consume as much electricity as the country of Ireland”[1]

2 Facts:

- Google introduced AI-generated summaries to search results in early May 2024[1].

- According to Alex de Vries, founder of Digiconomist, an AI-powered search consumes about three watt-hours of electricity[1].
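To put those per-search figures in perspective, here is a rough back-of-the-envelope sketch in Python. It uses only the two numbers quoted above: about 3 Wh per AI-powered search, and “ten times” a traditional search, which implies roughly 0.3 Wh per traditional search. The workshop size (30 participants asking 20 questions each) is a hypothetical assumption for illustration, not data from the article.

```python
# Per-query figures taken from the de Vries estimate quoted in The Lever article.
AI_SEARCH_WH = 3.0      # approx. watt-hours per AI-powered search
AI_TO_TRADITIONAL = 10  # an AI search is quoted as "ten times" a traditional one
TRADITIONAL_SEARCH_WH = AI_SEARCH_WH / AI_TO_TRADITIONAL  # implied approx. 0.3 Wh


def search_energy_wh(queries: int, ai: bool = True) -> float:
    """Estimated energy in watt-hours for a given number of searches."""
    per_query = AI_SEARCH_WH if ai else TRADITIONAL_SEARCH_WH
    return queries * per_query


def extra_energy_kwh(queries: int) -> float:
    """Extra energy in kWh from answering the searches with AI instead of traditionally."""
    extra_wh = search_energy_wh(queries, ai=True) - search_energy_wh(queries, ai=False)
    return extra_wh / 1000


if __name__ == "__main__":
    # Hypothetical "Intro to AI" workshop: 30 participants asking 20 questions each.
    queries = 30 * 20
    print(f"AI searches:          {search_energy_wh(queries):.0f} Wh")
    print(f"Traditional searches: {search_energy_wh(queries, ai=False):.0f} Wh")
    print(f"Extra energy:         {extra_energy_kwh(queries):.2f} kWh")
```

Under these assumptions, 600 AI-powered searches come to about 1.8 kWh, versus about 0.18 kWh for the same number of traditional searches. These are rough estimates; real per-query energy varies with the model, the hardware, and the data center.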

1 Question:

Given the significant increase in energy consumption for AI-powered searches, how can tech companies balance the benefits of AI integration with environmental sustainability?

Citations:

- [1] www.levernews.com/the-unknown-toll-of-the-ai-takeover/

- [2] www.metafilter.com/204279/Th…

- [3] www.levernews.com

- [4] www.levernews.com/lever-weekly-ais-unquenchable-thirst/

- [5] www.linkedin.com/posts/loi…